Business Scaling & AI: From Pilots to P&L

Oct 04, 2025

From my vantage point, we’re at the AI “tip of the spear.” Most leaders don’t lack AI ideas; they lack repeatable ways to turn those ideas into measurable results. The most common pattern I see is “pilot purgatory”: isolated experiments that excite stakeholders but never move unit economics. To scale with confidence, I advise my clients to treat AI like any other revenue-bearing product: anchor it to clear business outcomes, run it on a disciplined operating model, and prove value on a predictable cadence. Research from McKinsey and others shows rapid genAI adoption; the winners are the organizations that combine adoption with governance and measurement that executives and regulators trust (see McKinsey’s “State of AI” and PwC’s “Sizing the Prize” for context).

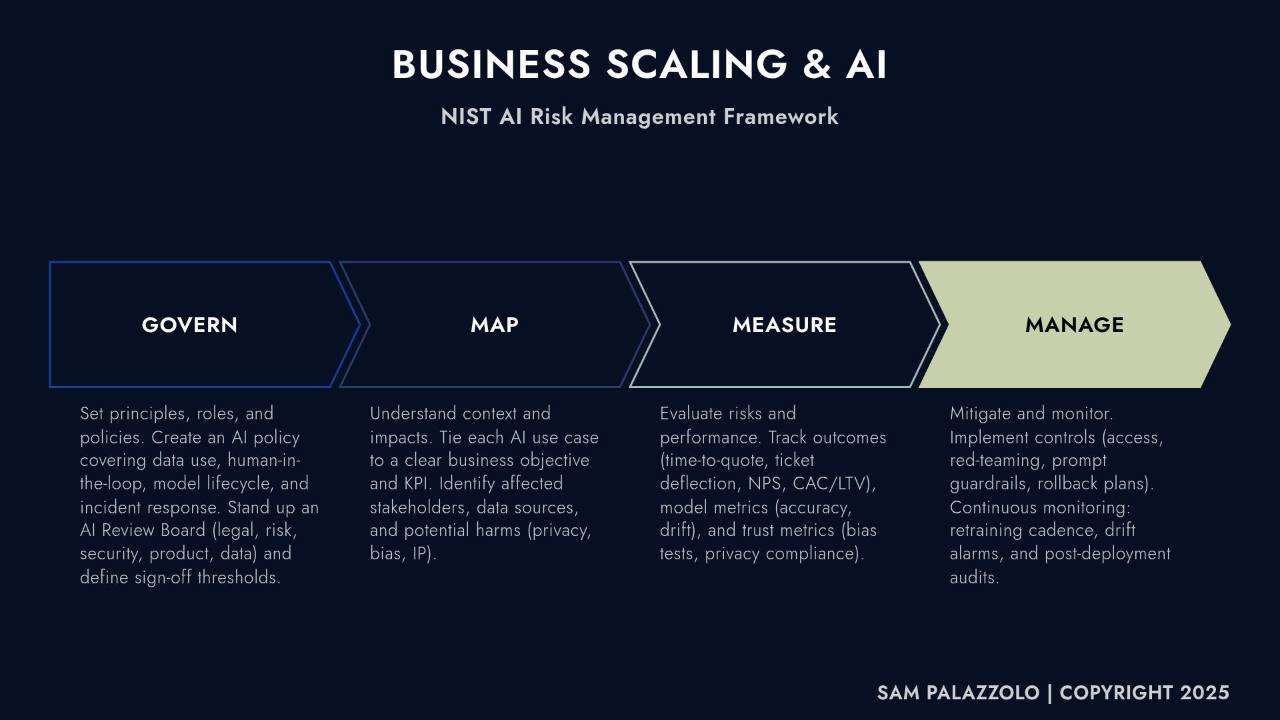

A Pragmatic Operating Spine: NIST AI Risk Management Framework

The NIST AI Risk Management Framework (AI RMF 1.0) offers a concise, vendor-neutral backbone for scaling AI responsibly. Its four core functions, Govern → Map → Measure → Manage, are simple enough to start quickly and robust enough to satisfy risk, legal, and security stakeholders. (NIST AI RMF 1.0: PDF and Playbook.)

Govern: Set the rules before you write prompts

- Publish a two-page AI Policy v1 covering data use, human-in-the-loop, model lifecycle, and incident response.

- Establish an AI Review Board (legal, risk, security, product, data) with clear sign-off thresholds and escalation paths.

- Define minimum documentation for every use case: business objective, KPI, data sources, and expected risks.
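
One way to make the minimum-documentation rule enforceable is to capture each use case as a structured record that an intake form or review tool can check. The sketch below is illustrative only: the class name, field names, and example values are my assumptions, not part of the NIST framework or of any particular tool.

```python
from dataclasses import dataclass, field


@dataclass
class UseCaseRecord:
    """Minimum documentation for one AI use case (field names are illustrative)."""
    name: str
    business_objective: str
    kpi: str
    data_sources: list[str] = field(default_factory=list)
    expected_risks: list[str] = field(default_factory=list)

    def missing_fields(self) -> list[str]:
        """Return the names of required fields that are still empty."""
        required = {
            "business_objective": self.business_objective,
            "kpi": self.kpi,
            "data_sources": self.data_sources,
            "expected_risks": self.expected_risks,
        }
        return [key for key, value in required.items() if not value]


# Hypothetical example: a record submitted without its risk section.
record = UseCaseRecord(
    name="Intake triage assistant",
    business_objective="Reduce cycle time on inbound requests",
    kpi="First-touch resolution rate",
    data_sources=["ticketing system export"],
)
print(record.missing_fields())  # ['expected_risks']
```

A Review Board could decline to schedule any use case whose `missing_fields()` list is not empty, which keeps the sign-off conversation focused on substance rather than paperwork.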

Map: Tie use cases to money and risk

- Select 2–3 use cases that touch revenue, cost, or risk (e.g., time-to-quote, conversion, claims leakage).

- Identify stakeholders, workflows, and potential harms (privacy, bias, IP) and document where humans remain accountable.

- Validate data readiness (quality, lineage, access controls) before building.

Measure: Track outcomes—not activity

- Instrument business KPIs (cycle time, margin, NPS, CAC/LTV), model metrics (accuracy, drift), and trust metrics (bias tests, privacy compliance).

- Establish a Quarterly AI Value Review that funds winners, sunsets laggards, and updates the risk register.

- Report results in the language of P&L and operational KPIs—not “number of prompts.”
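
To show what “fund winners, sunset laggards” can look like in practice, here is a minimal sketch of a review rule that compares each KPI against its baseline. The thresholds (`fund_at`, `sunset_below`), the intermediate “watch” outcome, and the sample figures are hypothetical assumptions for illustration; your own review would set them with finance.

```python
from dataclasses import dataclass


@dataclass
class KpiResult:
    use_case: str
    kpi: str
    baseline: float
    current: float
    lower_is_better: bool = False  # e.g., cycle time or cost-to-serve

    def improvement_pct(self) -> float:
        """Percent change versus baseline, signed so that positive means better."""
        change = (self.current - self.baseline) / self.baseline * 100.0
        return -change if self.lower_is_better else change


def review_decision(result: KpiResult, fund_at: float = 10.0,
                    sunset_below: float = 0.0) -> str:
    """Map a KPI result to a review outcome using hypothetical thresholds."""
    pct = result.improvement_pct()
    if pct >= fund_at:
        return "fund"
    if pct < sunset_below:
        return "sunset"
    return "watch"  # improving, but not yet enough to earn more budget


# Hypothetical figures for three pilots.
results = [
    KpiResult("Quote assistant", "time-to-quote (hours)", 40.0, 30.0, lower_is_better=True),
    KpiResult("Guided selling", "conversion (%)", 20.0, 21.0),
    KpiResult("Claims review", "leakage (%)", 4.0, 5.0, lower_is_better=True),
]
for r in results:
    print(r.use_case, round(r.improvement_pct(), 1), review_decision(r))
```

The point of the design is that the decision is driven by a business KPI relative to baseline, never by usage counts.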

Manage: Mitigate, monitor, and scale what works

- Implement controls (role-based access, red-teaming, prompt guardrails, rollback plans).

- Monitor for drift, schedule retraining, and run post-deployment audits.

- Productize successful pilots with SLAs, dashboards, and clear ownership in the operating model.
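
Drift monitoring can start very simply. The sketch below uses the Population Stability Index (PSI), one common drift statistic that I am choosing here for illustration; the framework does not prescribe it. The function name, the sample data, and the 0.1 alert threshold are assumptions, and any threshold should be tuned to the business risk of the use case.

```python
import math
from collections import Counter


def psi(expected: list[str], actual: list[str], eps: float = 1e-6) -> float:
    """Population Stability Index between two samples of categorical values.

    PSI = sum over categories of (a - e) * ln(a / e), where e and a are the
    category shares in the baseline and current samples. eps avoids log(0)
    when a category is absent from one sample.
    """
    categories = set(expected) | set(actual)
    exp_counts, act_counts = Counter(expected), Counter(actual)
    total = 0.0
    for cat in categories:
        e = max(exp_counts[cat] / len(expected), eps)
        a = max(act_counts[cat] / len(actual), eps)
        total += (a - e) * math.log(a / e)
    return total


# Hypothetical data: share of "high priority" predictions shifts from 30% to 50%.
baseline = ["low"] * 70 + ["high"] * 30
this_month = ["low"] * 50 + ["high"] * 50

score = psi(baseline, this_month)
ALERT_THRESHOLD = 0.1  # assumed value; set per use case
if score > ALERT_THRESHOLD:
    print(f"PSI {score:.3f}: investigate drift and consider retraining")
```

An identical distribution yields a PSI of zero, so the score is easy to explain on a dashboard: the further from zero, the more the model's inputs or outputs have moved away from what was validated.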

Real-World Examples: What Good Looks Like

Operations—Intelligent Triage.

A services organization consolidated disparate intake queues and deployed an AI triage assistant with human-in-the-loop controls. By “Mapping” the use case to a single KPI (first-touch resolution) and “Measuring” both model quality and operational outcomes, the team cut cycle time and reduced escalations. Governance addressed privacy and IP questions up front, enabling faster scale-out to adjacent workflows.

Revenue—Guided Selling and Prompt Discipline.

A go-to-market team replaced ad-hoc prompting with a documented playbook: approved data sources, prompt templates, and quality checks embedded in the CRM. Outcome metrics (opportunity velocity, win rate) were tracked alongside trust metrics (hallucination checks, brand-voice adherence). The “Manage” layer included red-team reviews before seasonal campaigns and a rollback plan tied to customer-impact thresholds.

Finance—Variance Analysis at Month-End.

FP&A automated the first pass of variance explanations using a controlled set of ledgers and narratives. Human reviewers approved outputs above a confidence threshold; lower-confidence items were routed for deeper analysis. The result: faster close, more consistent narratives, and fewer handoffs—without relaxing compliance standards.
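
The routing rule in this example is easy to sketch. The code below assumes the model returns a confidence score between 0 and 1 for each draft explanation; the class name, the account labels, and the 0.8 threshold are hypothetical, and I treat “at or above the threshold” as eligible for approval review.

```python
from dataclasses import dataclass


@dataclass
class VarianceDraft:
    account: str
    narrative: str
    confidence: float  # assumed model-reported score in [0, 1]


def route(drafts: list[VarianceDraft], threshold: float = 0.8):
    """Split drafts into a human-approval queue and a deeper-analysis queue."""
    approval_queue, analysis_queue = [], []
    for draft in drafts:
        if draft.confidence >= threshold:
            approval_queue.append(draft)   # a reviewer still signs off
        else:
            analysis_queue.append(draft)   # analyst investigates from scratch
    return approval_queue, analysis_queue


# Hypothetical month-end drafts.
drafts = [
    VarianceDraft("Travel", "Up on conference season timing.", 0.92),
    VarianceDraft("Contractors", "Possible reclassification; unclear.", 0.55),
]
approval, analysis = route(drafts)
print([d.account for d in approval], [d.account for d in analysis])
```

Note that both queues end with a human: the threshold changes how much work the reviewer does, not whether someone is accountable.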

Metrics That Matter for Scaling AI

Outcome Metrics

Cycle-time reduction, conversion uplift, gross-margin expansion, ticket deflection, cost-to-serve, and customer retention. These are the numbers that finance and the board recognize.

Model and Quality Metrics

Accuracy, precision/recall (where relevant), robustness tests, and drift monitoring. Quality targets should be tied to business risk, not academic benchmarks.

Trust and Risk Metrics

Privacy compliance, bias and fairness tests, explainability where required, incident counts and time-to-remediation. These metrics de-risk scale and accelerate stakeholder buy-in.

Change Management: Enablement as a First-Class Workstream

The best models fail without adoption. Treat prompt discipline and workflow redesign as teachable skills. Build enablement around short playbooks, scenario-based training, and scorecards that show each team how AI changes their day-to-day work. Incentives should reward outcome improvements, not mere usage.

Closing Thoughts: Make AI Earn Its Keep

AI becomes a competitive advantage when you operationalize it with the same discipline you apply to any product or process redesign. Start with high-leverage use cases, apply a clear governance spine, measure outcomes relentlessly, and scale only what pays. Organizations that do this consistently will compound gains—faster cycle times, better decisions, and resilient margins—while staying aligned with evolving regulations and customer expectations.

90-Day Action Plan

- Weeks 1–2: Stand up the AI Review Board, publish AI Policy v1, and shortlist 2–3 use cases tied to explicit KPIs.

- Weeks 3–6: Run a data-readiness sprint, draft prompt standards, baseline metrics, and complete risk mapping.

- Weeks 7–10: Pilot with human-in-the-loop, instrument dashboards, red-team, and document lessons learned.

- Weeks 11–12: Hold a Quarterly AI Value Review, retire weak pilots, and productize the winner with SLAs and monitoring.

If you want deeper context on the adoption landscape, see McKinsey’s State of AI (2024/2025) and PwC’s Sizing the Prize for macroeconomic impact. Pair those insights with the NIST AI RMF to scale safely and measurably.

Sam Palazzolo, Principal Officer @ The Javelin Institute

Subscribe to the Business Scaling Newsletter

Get weekly, practical guidance on strategy, execution, and AI-driven growth. Subscribe here: https://sampalazzolo.com/
